A rootkit is a collection of tools a hacker installs on a victim computer after gaining initial access. It generally consists of network sniffers, log-cleaning scripts, and trojaned replacements of core system utilities such as ps, netstat, ifconfig, and killall.

Hackers are not the only ones who introduce rootkits onto your machine. Recently Sony, a multi-billion-dollar company, was caught surreptitiously installing a rootkit when a user played one of its music CDs on the Windows platform. This was *supposedly* designed to stop copyright infringement. Following a worldwide furore, the company withdrew the CD from the market.

Detecting rootkits on your machine running GNU/Linux

I know of two programs which aid in detecting whether a rootkit has been installed on your machine. They are Rootkit Hunter and Chkrootkit.

Rootkit Hunter

This script checks for around 58 known rootkits, along with a couple of sniffers and backdoors, to make sure that your machine is not infected with any of them. It does this by running a series of tests that look for default files used by rootkits, check for wrong file permissions on binaries, inspect the kernel modules, and so on.

Rootkit Hunter is developed by Michael Boelen and has been released under a GPL licence.

Installing Rootkit Hunter is easy: download and unpack the archive from its website, then run the installer.sh script while logged in as root.

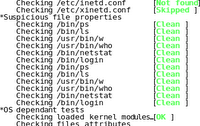

Fig: Rootkit Hunter checking for rootkits on a Linux machine.


Once installed, you can run rootkit hunter to check for any rootkits infecting your computer using the following command:

# rkhunter -c

The rkhunter binary is installed in the /usr/local/bin directory, and you need to be logged in as root to run it. Once executed, it conducts the following series of tests:

- MD5 tests to check for any changes

- Checks the binaries and system tools for any rootkits

- Checks for trojan specific characteristics

- Checks for any suspicious file properties of most commonly used programs

- Carries out a couple of OS dependent tests - this is because rootkit hunter supports multiple OSes.

- Scans for any promiscuous interfaces and checks frequently used backdoor ports.

- Checks all the configuration files such as those in the /etc/rc.d directory, the history files, any suspicious hidden files and so on. For example, on my system, it gave a warning to check the files /dev/.udev and /etc/.pwd.lock.

- Does a version scan of applications which listen on any ports such as the apache web server, procmail and so on.

After all this, it outputs the results of the scan and lists the possible infected files, incorrect MD5 checksums and vulnerable applications if any.
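To make the idea behind the MD5 tests concrete, here is a minimal sketch of a known-good checksum comparison using the standard md5sum tool. It is not rkhunter's own code: the function name check_against_baseline and the baseline file are my own assumptions, and it only works if the baseline was recorded while the system was still clean.

```shell
#!/bin/sh
# Sketch only: NOT rkhunter's implementation. The function name and the
# baseline file layout are hypothetical.

# check_against_baseline BASELINE_FILE
# BASELINE_FILE holds lines as written by `md5sum FILE...`.
# Prints "path: FAILED" for every file whose checksum no longer matches
# and returns non-zero if any file fails.
check_against_baseline() {
    baseline="$1"
    # --quiet hides the "OK" lines, so only mismatches are printed;
    # the summary warning on stderr is discarded.
    md5sum --check --quiet "$baseline" 2>/dev/null
}

# Example (run as root; the paths are illustrative):
#   md5sum /bin/ps /bin/ls > /root/baseline.md5      # while the system is clean
#   check_against_baseline /root/baseline.md5 || echo "checksum mismatch"
```

A trojaned replacement of ps or ls would show up here as a FAILED line, which is the same kind of signal rkhunter reports as an incorrect MD5 checksum.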

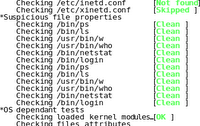

Fig: Another screenshot of rootkit hunter conducting a series of tests.


On my machine, the scan took 175 seconds. By default, rkhunter conducts a known-good check of the system, but you can also request a known-bad check by passing the '--scan-knownbad-files' option as follows:

# rkhunter -c --scan-knownbad-files

Because rkhunter relies on a database of rootkit names to detect infections, it is important to keep that database up to date. This is also done from the command line as follows:

# rkhunter --update

Ideally, run the above command as a cron job so that, once it is set up, cron checks for updates for you and you can forget about it. For example, I entered the following line in my crontab file as the root user:

59 23 1 * * echo "Rkhunter update check in progress";/usr/local/bin/rkhunter --update

The above line will check for updates on the first of every month at exactly 11:59 PM, and the result will be mailed to my root account.
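If the crontab line grows any longer, it may be tidier to move the commands into a small wrapper script. The following is only a sketch: the function name rkhunter_update and the RKHUNTER variable are my own inventions, and the only rkhunter option it uses is the --update option shown above.

```shell
#!/bin/sh
# Hypothetical wrapper for the cron entry; the function name and the
# RKHUNTER variable are illustrative and not part of rkhunter.
RKHUNTER="${RKHUNTER:-/usr/local/bin/rkhunter}"

# Run the database update and report the outcome on stdout.
# Cron mails whatever is printed to the owner of the crontab.
rkhunter_update() {
    echo "Rkhunter update check in progress"
    if [ ! -x "$RKHUNTER" ]; then
        echo "rkhunter not found at $RKHUNTER"
        return 127
    fi
    "$RKHUNTER" --update
    rc=$?
    echo "rkhunter --update exited with status $rc"
    return "$rc"
}
```

To use it, save the script somewhere root can execute it, add a final line that calls rkhunter_update, and point the crontab entry at the script instead of at rkhunter itself. The exit status in the mail makes a failed update easier to spot.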

Chkrootkit

This is another very useful program, created by Nelson Murilo and Klaus Steding-Jessen, which aids in finding rootkits on your machine. Unlike Rootkit Hunter, chkrootkit does not come with an installer; you just unpack the archive and execute the program named chkrootkit. It conducts a series of tests on a number of binary files. Just like the previous program, it checks all the important binary files, searches for telltale signs of log files left behind by an intruder, and runs many other tests. In fact, if you pass the -l option to this command, it will list all the tests it can conduct on your system.

# chkrootkit -l

And if you really want to see some interesting stuff scroll across your terminal, execute the chkrootkit tool with the following option:

# chkrootkit -x

... which will run this tool in expert mode.
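A plain run (without -x) prints one line per test, which makes for a long report. Here is a small sketch of a filter that keeps only the lines worth a closer look. It assumes that chkrootkit marks positive findings with the uppercase word INFECTED while clean results read "not infected"; the function name filter_findings is hypothetical.

```shell
#!/bin/sh
# Sketch: reduce a chkrootkit report to the lines that flag a problem.
# Assumption: positives contain the uppercase word "INFECTED"; clean
# results say "not infected" in lowercase, which this case-sensitive
# grep does not match.
filter_findings() {
    grep 'INFECTED'
}

# Example (run as root from the unpacked chkrootkit directory):
#   ./chkrootkit | filter_findings
```

No output (and a non-zero exit status from grep) means nothing was flagged. Any hit still deserves manual verification, since a match can be a false positive.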

Rootkit Hunter and Chkrootkit together make a nice combination of tools to have in one's arsenal for detecting rootkits on a machine running Linux.

Update: One reader has kindly pointed out that Michael Boelen has handed over the Rootkit Hunter project to a group of 8 like-minded developers. The new site is located at rkhunter.sourceforge.net
