Google Docs - giving the competition a run for its money

Once I logged in using my Gmail id, I was pleasantly surprised by the rich interface that loaded in my web browser. The editor took around a minute to load the very first time, but subsequent loads were quite fast. I found almost all the features expected of a word processor here, but without the clutter and excesses you find in MS Word.

Fig: Document as viewed within Google Docs


In fact, the learning curve for anyone who has used MS Word at one time or another is practically nil: the tool bar offers similar buttons and options, and all it takes is to start typing and editing the document. As of now, Google allows you to embed images no larger than 2 MB each, but the document editor provides a lot of flexibility in placing the image within the document in relation to the flow of the paragraph.

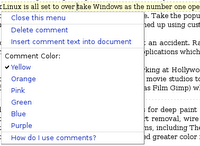

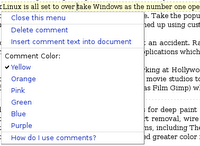

Insert comments

Another feature that really attracted me was the ability to embed comments within the document. The background color of the comments can be changed, and Google Docs automatically inserts the date and time at which the comment was made.

Fig: Change the color of the comments


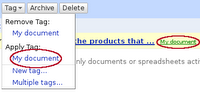

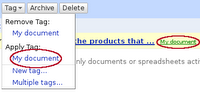

Insert Bookmarks

It is possible to link to different places within the document itself. Google calls these internal links bookmarks, and they can aid in the creation of an index or table of contents. For example, each section in a document can be bookmarked, and when you create a TOC, all the bookmarked sections will be reflected in the table.

Fig: Tag a document


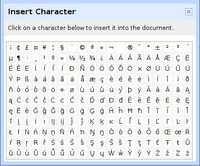

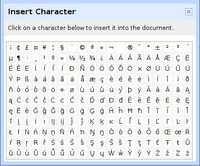

Insert special characters

Sometimes it becomes necessary to include characters other than those found in the English language, for instance when writing a Norwegian name. Google Docs provides a separate dialog which lists all the special characters that one may find useful. Clicking on a special character inserts it in the document being edited.

Fig: Special character dialog


Font support

The fonts included with Google Docs, though not as many as those found in MS Word, are still significant in number. All the most commonly used fonts, such as Arial, Georgia, Tahoma and Times New Roman, are included.

Revisions

This feature is a godsend for all those people who edit and re-edit a document many times over before they are satisfied with it. Google has made this quite easy by making sure that all the changes are logged. This makes it possible to go back in time to a view of the document before a mistake was made, or to revert to the final edit. It is also possible to compare two revisions of the document side by side.

Fig: Revisions page allows one to move back and forth in time of the document


Collaboration

One of the most exciting features of Google Docs is that it allows a group of people scattered across the net to get together virtually and collaborate on a document in real time. This feature will gladden the hearts of corporate and business teams: assuming the document in question is not confidential, they can easily bring together a team spanning the net and work on it together. Google Docs provides two roles for users, 'collaborator' and 'viewer'. Viewers can see the same document that collaborators do but cannot edit it, whereas collaborators may edit the document and invite more collaborators and viewers. Interestingly, Google Docs even provides an RSS feed of the document's changes, which can be passed around.

Share your documents in three different ways

Google provides three ways of sharing your document with others. They are as follows:

- Via Email - Email the document to others using your Gmail account, which is integrated with Google Docs. Since logging in is now centralised and your email id is used to log on to all the Google services, this integration is to be expected.

- Publish it as a web page - Google allows you to publish your document to the internet, where everyone will be able to access and view it online. The document will be assigned a unique address (URL) on Google.com that you can send to your friends and colleagues.

- Post to Blog - This is the most interesting one. It is possible to post your document to your personal blog. At present Google Docs supports the following hosted blog services: Blogger.com, Blogharbor.com, Blogware.com, WordPress.com, LiveJournal and SquareSpace. If you host your own website, you can still publish the document to your personal blog by selecting the "my own/ Custom server" choice (see figure below).

Fig: Post to a blog dialog


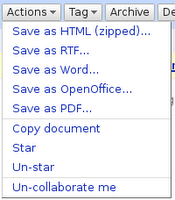

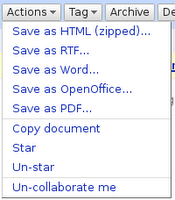

Export the documents in a number of file formats

It is possible to export the documents residing in Google Docs to a variety of file formats such as RTF, PDF, HTML, MS Word and OpenOffice format. However, I found that the PDF export needs some more work, as the resulting PDF distorts the aspect ratio of the images embedded in the document.

Fig: Export documents in a variety of file formats


Fig: The save as PDF feature needs further improvement.


I have been using the erstwhile Writely for creating and editing my documents online for quite some time now, and Writely is no doubt an excellent product. By integrating it with other Google services, providing a consistent interface and including additional features, Google has enhanced the value of this product to a great extent.

Google Spreadsheets

This is the sister product of Google Docs, and it is now possible to edit a spreadsheet while logged into the same Google account. The first time you start editing a new spreadsheet, Google shows a warning that the spreadsheet is unsaved and prompts you to turn on auto saving, for which you first have to save it once by giving it a name.

Fig: Numbers format drop down menu


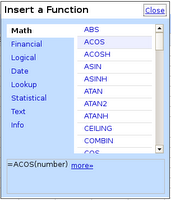

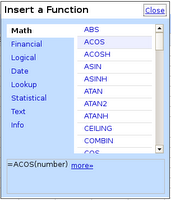

Google Spreadsheets comes with a rich set of formulas. Inserting a formula is similar to what you do in any other spreadsheet product: type the equals (=) sign and enter the formula. It is also possible to insert a formula using the unique formula dialog (see figure).

Fig: Formula dialog


Fig: Inserting a formula inside a cell is similar to that in Excel


One feature that Google Spreadsheets lacks is the ability to create a chart from the values in the cells. Also, it is not yet possible to embed a spreadsheet in a Google document or vice versa.

Google even allows you to upload your documents, which it imports into your online Google Docs account. As of now, you are limited to a maximum document size of 500 KB. You can upload HTML, plain text, MS Word, Rich Text (RTF), Open Document Text (ODT) and Star Office files. As far as spreadsheets are concerned, three file formats are supported: CSV (comma separated values), Microsoft Excel and Open Document spreadsheet files.
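If you want to check a batch of files before uploading them, the limits described above can be sketched in a few lines of Python. This is only an illustration: the extension lists are my own guesses at how the named formats map to file suffixes, reading the limit as 500 * 1024 bytes is an assumption, and so is applying the document size limit to spreadsheets. The function names are made up for this example and have nothing to do with Google's own code.

```python
from pathlib import Path

# Limit quoted above; treating it as 500 * 1024 bytes is an assumption.
MAX_UPLOAD_BYTES = 500 * 1024

# Hypothetical suffix lists for the formats named above.
DOCUMENT_EXTENSIONS = {".html", ".htm", ".txt", ".doc", ".rtf", ".odt", ".sxw"}
SPREADSHEET_EXTENSIONS = {".csv", ".xls", ".ods"}


def upload_kind(filename):
    """Return "document", "spreadsheet", or None for an unsupported type."""
    suffix = Path(filename).suffix.lower()
    if suffix in DOCUMENT_EXTENSIONS:
        return "document"
    if suffix in SPREADSHEET_EXTENSIONS:
        return "spreadsheet"
    return None


def can_upload(filename, size_bytes):
    """Check a file name and size against the limits described above."""
    if upload_kind(filename) is None:
        return False
    # Assumption: the same size limit applies to spreadsheets as to documents.
    return size_bytes <= MAX_UPLOAD_BYTES
```

For example, `can_upload("notes.odt", 100 * 1024)` passes the check, while a 600 KB file or a PNG image does not.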

Oh yeah, Google Docs also provides a unique email address with each account, something like De-dd3gf2hf-Ac648m5h@prod.writely.com, which is different for every user of Google Docs and is not the same as the Gmail id. By sending documents to this address, either as an attachment or as the body of the email, it is possible to store them in the Google Docs account.
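For those who would rather script this than use a mail client, here is a rough sketch using Python's standard `email` package to build such a message with the document as an attachment. The helper name `build_upload_email` is hypothetical, using the file name as the subject is my own choice rather than anything Google documents, and the sketch stops short of sending; you would hand the result to your own mail server, for example with `smtplib.SMTP.send_message`.

```python
from email.message import EmailMessage


def build_upload_email(sender, upload_address, filename, data):
    """Build an email carrying one document as an attachment.

    upload_address is the per-account address described above.
    """
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = upload_address
    # Assumption: the file name is used as the subject line.
    msg["Subject"] = filename
    msg.set_content("Document attached.")
    # Generic binary type; a more specific MIME type could be used instead.
    msg.add_attachment(
        data,
        maintype="application",
        subtype="octet-stream",
        filename=filename,
    )
    return msg


message = build_upload_email(
    "me@example.com",
    "De-dd3gf2hf-Ac648m5h@prod.writely.com",  # sample address from the text above
    "notes.txt",
    b"hello",
)
```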

All in all, this is a great venture by Google to provide online equivalents of the most popular office applications, namely word processors and spreadsheets. It remains to be seen how this will affect the fortunes of Microsoft, considering that MS Office is one of its flagship products and pulls in a significant share of its net revenue.

As of now, those corporates for whom confidentiality is a decisive factor might stick to MS Office or similar products, as they may be squeamish about their documents residing on a Google server. But for the rest of us, this move by Google is a great leap forward, as it has successfully de-linked productivity applications from the operating system. It is possible to view, collaborate on and edit one's documents from within any OS - be it Windows, Linux, Mac OS X or any other - as long as you have access to a web browser.

MythTV is a free, open source digital video recorder project distributed under the terms of the GNU GPL. In a previous post, I wrote about the features of MythTV and listed a number of MythTV sites which provide help in installing MythTV and configuring it to work on one's machine. MythTV allows one to watch TV on one's computer, and it can intelligently detect commercials in TV programs and skip through them.
