A few days back, an acquaintance of mine mentioned that his office server, which was running Linux, had been hacked and a lot of data was lost. He suspected it was an inside job. This set me thinking. A machine is only as secure as its environment, and however robust an OS may be (Linux included), it will still be vulnerable if certain basic guidelines are not followed. Here I will explain some of the steps that will help make your Linux computer secure.

Physical Security

The first layer of security you need to take into account is the physical security of your computer systems. Some relevant questions to ask yourself while designing security are:

- Who has direct physical access to your machine and should they?

- Can you protect your machine from their tampering?

How much physical security you need depends heavily on your situation (company policies, how critical the stored data is, etc.) and your budget.

If you are a home user, you probably don't need a lot (although you might need to protect your machine from tampering by children or annoying relatives). If you are in a lab, you need considerably more, but users will still need to be able to get work done on the machines. Many of the following sections will help out. If you are in an office, you may or may not need to secure your machine off-hours or while you are away. At some companies, leaving your console unsecured is a termination offense.

Obvious physical security methods such as locks on doors, cables, locked cabinets, and video surveillance are all good ideas.

Computer locks

Many modern PC cases include a "locking" feature. Usually this will be a socket on the front of the case that allows you to turn an included key to a locked or unlocked position. Case locks can help prevent someone from stealing your PC, or opening up the case and directly manipulating/stealing your hardware. They can also sometimes prevent someone from rebooting your computer from their own floppy or other hardware.

These case locks do different things depending on the support in the motherboard and how the case is constructed. On many PCs they make it so you have to break the case to get it open. On others, they will not let you plug in new keyboards or mice. Check your motherboard or case instructions for more information. This can sometimes be a very useful feature, even though the locks are usually very low-quality and can easily be defeated by attackers with basic locksmithing skills.

Some machines (most notably SPARCs and Macs) have a dongle on the back. If you put a cable through it, attackers would have to cut the cable or break the case to get into the machine. Just putting a padlock or combination lock through it can be a good deterrent to someone stealing your machine.

This is different from a software protection dongle, which is a hardware device used to prevent unlicensed installations of software. The software is designed to operate only when the hardware device is attached, so only users who have the device can run it. If the software is installed on another machine, it will not work because the device is not attached. Hardware keys are distributed to end users according to the number of seat licenses purchased. (Sorry to digress.)

Figure: Hardware dongles used to secure the PC

BIOS Security

The BIOS is the lowest level of software that configures or manipulates your x86-based hardware. LILO and other Linux boot loaders access the BIOS to determine how to boot up your Linux machine. Other hardware that Linux runs on has similar software (Open Firmware on Macs and new Suns, Sun boot PROM, etc.). You can use your BIOS to prevent attackers from rebooting your machine and manipulating your Linux system.

Many PC BIOSes let you set a boot password. This doesn't provide much security (the BIOS can be reset, or removed if someone can get into the case), but it can be a good deterrent: it takes time and leaves traces of tampering. Similarly, on SPARC machines running Linux, the EEPROM can be set to require a boot-up password. This might slow attackers down.

Another risk of relying on BIOS passwords to secure your system is the default password problem. Many BIOS makers do not expect people to open up their computers and disconnect the battery when they forget their password. Instead, they have equipped their BIOSes with default passwords that work regardless of the password you chose. These passwords are readily available from manufacturers' websites, so a BIOS password cannot be considered adequate protection against a knowledgeable attacker.

Many x86 BIOSs also allow you to specify various other good security settings. Check your BIOS manual or look at it the next time you boot up. For example, some BIOSs disallow booting from floppy drives and some require passwords to access some BIOS features.

Note: If you have a server machine, and you set up a boot password, your machine will not boot up unattended. Keep in mind that you will need to come in and supply the password in the event of a power failure.

Boot Loader Security

Linux boot loaders such as LILO and GRUB can also have a boot password set. LILO, for example, has 'password' and 'restricted' settings. 'password' requires a password at boot time, whereas 'restricted' requires a boot-time password only if you specify options (such as single) at the LILO prompt. Nowadays most Linux distributions use GRUB as the default boot loader. GRUB can also be password protected. The password can be stored as an MD5 hash, so a snooper who reads the configuration file cannot easily recover it.

Keep in mind when setting all these passwords that you need to remember them. Also remember that these passwords will merely slow down a determined attacker. They won't prevent someone from booting from a floppy and mounting your root partition. If you rely on a boot loader password, you should also disable booting from floppy in your computer's BIOS and password-protect the BIOS.

Note: If you are using LILO, the /etc/lilo.conf file should have permissions set to "600" (readable and writable by root only), or others will be able to read your passwords!
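As a quick check, the sketch below reports whether a boot loader configuration file is readable or writable by anyone other than its owner, and whether root owns it. It is a minimal sketch that assumes a POSIX shell and the usual 'ls -ld' output layout. The function name check_secret_file is hypothetical.

```sh
# check_secret_file is a hypothetical helper name used for illustration.
# It reports whether FILE is accessible to group/others or not owned by root.
check_secret_file() {
    file=$1
    if [ ! -e "$file" ]; then
        echo "MISSING: $file"
        return 2
    fi
    # Characters 5-10 of 'ls -ld' output are the group and other permission bits.
    other_bits=$(ls -ld "$file" | cut -c5-10)
    # The third field of 'ls -ld' output is the owner.
    owner=$(ls -ld "$file" | awk '{print $3}')
    if [ "$other_bits" != "------" ]; then
        echo "INSECURE: $file is accessible to group/others; fix with: chmod 600 $file"
        return 1
    fi
    if [ "$owner" != "root" ]; then
        echo "WARNING: $file is owned by $owner, not root"
        return 1
    fi
    echo "OK: $file"
}
```

For example, 'check_secret_file /etc/lilo.conf' prints the chmod command to run if the file is too open. The same check applies to /boot/grub/grub.conf if it holds a clear-text password.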

To password protect the GRUB boot loader, insert the following line in the global section of your /boot/grub/grub.conf file (before the first 'title' entry).

```
# File: /boot/grub/grub.conf
...
password --md5 PASSWORD
...
```

If this is specified, GRUB disallows any interactive control until you press the key "p" and enter the correct password. The option '--md5' tells GRUB that 'PASSWORD' is in MD5 format. If it is omitted, GRUB assumes 'PASSWORD' is in clear text.

You can hash your password with the 'md5crypt' command. For example, run the grub shell and enter your password as shown below:

```
# grub
grub> md5crypt
Password: **********
Encrypted: $1$U$JK7xFegdxWH6VuppCUSIb.
```

Now copy and paste the encrypted password to your configuration file.
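To confirm that the password really ended up hashed rather than in clear text, you can inspect the 'password' line in the configuration file. The shell function below is a minimal sketch. The name grub_password_status is hypothetical, and the function assumes the GRUB legacy 'password' syntax shown above. It only looks at the first uncommented 'password' line.

```sh
# grub_password_status is a hypothetical helper name used for illustration.
# Prints "md5", "plaintext" or "none" for the given GRUB legacy config file.
grub_password_status() {
    conf=$1
    # Only lines that start with 'password' (after optional whitespace);
    # commented-out lines such as '#password ...' are not matched.
    line=$(grep -E '^[[:space:]]*password[[:space:]]' "$conf" | head -n 1)
    case $line in
        "") echo "none" ;;
        *--md5*) echo "md5" ;;
        *) echo "plaintext" ;;
    esac
}
```

For example, 'grub_password_status /boot/grub/grub.conf' should print md5 after you have pasted in the hash.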

GRUB also has a 'lock' command. When 'lock' is placed in a menu entry, directly after its 'title' line, that entry cannot be booted until the user supplies the password set with the 'password' command.

Locking your Terminal

If you wander away from your machine from time to time, it is nice to be able to "lock" your console so that no one can tamper with, or look at, your work. Two programs that do this are xlock and vlock. However, some recent Linux distributions, Fedora among them, do not ship these utilities. If you don't have them, you can set the TMOUT variable in your bash shell instead. TMOUT sets an idle timeout for your bash shell, which is particularly important when you are working at the console. For example, I have set my TMOUT variable as follows in my .bashrc file:

```
# FILE: .bashrc
TMOUT=600
```

The value of TMOUT is in seconds. So if my shell is left idle at the prompt for 10 minutes, it automatically logs me out of my account.
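A setting in .bashrc only covers your own account, and any user can simply unset TMOUT. A common hardening step is to set the timeout system-wide and make it read-only. The fragment below is a sketch, assuming bash login shells that source /etc/profile.d/*.sh. Many distributions do this, but check yours. The file name tmout.sh is hypothetical.

```sh
# /etc/profile.d/tmout.sh (hypothetical file name; assumes login shells
# on this system source /etc/profile.d/*.sh)

# Log out shells that sit idle at the prompt for 600 seconds (10 minutes).
TMOUT=600
# Make the value read-only so users cannot change or unset it in this shell.
readonly TMOUT
# Export it so child shells start with the same timeout.
export TMOUT
```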

If you have xlock installed, you can run it from any xterm on your console and it will lock the display and require your password to unlock. vlock is a simple little program that allows you to lock some or all of the virtual consoles on your Linux box. You can lock just the one you are working in or all of them. If you just lock one, others can come in and use the console; they will just not be able to use your virtual console until you unlock it.

Of course locking your console will prevent someone from tampering with your work, but won't prevent them from rebooting your machine or otherwise disrupting your work. It does not prevent them from accessing your machine from another machine on the network and causing problems.

More importantly, it does not prevent someone from switching out of the X Window System entirely, and going to a normal virtual console login prompt, or to the virtual console that X11 was started from, and suspending it, thus obtaining your privileges. For this reason, you might consider only using it while under control of xdm.

Security of local devices

If you have a webcam or a microphone attached to your system, you should consider whether there is some danger of an attacker gaining access to those devices. When they are not in use, unplugging or removing such devices might be an option. Otherwise, you should carefully examine any software that provides access to such devices. I have read a news item about a hacker who had remotely taken control of the webcam connected to a lady's computer and was able to view the private goings-on in her room.

Detecting Physical Security Compromises

The first thing to always note is when your machine was rebooted. Since Linux is a robust and stable OS, the only times your machine should reboot are when you take it down for OS upgrades, hardware swapping, or the like. If your machine has rebooted without you doing it, that may be a sign that an intruder has compromised it. Many of the ways that your machine can be compromised require the intruder to reboot or power off your machine.

Check for signs of tampering on the case and computer area. Although many intruders clean traces of their presence out of logs, it's a good idea to check through them all and note any discrepancy.

It is also a good idea to store log data at a secure location, such as a dedicated log server within your well-protected network. Once a machine has been compromised, log data becomes of little use as it most likely has also been modified by the intruder.

The syslog daemon can be configured to automatically send log data to a central syslog server, but this is typically sent unencrypted, allowing an intruder to view data as it is being transferred. This may reveal information about your network that is not intended to be public. There are syslog daemons available that encrypt the data as it is being sent.

Some things to check for in your logs:

- Short or incomplete logs.

- Logs containing strange timestamps.

- Logs with incorrect permissions or ownership.

- Records of reboots or restarting of services.

- Missing logs.

- su entries or logins from strange places.
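Two of these checks are easy to script. The sketch below lists log files that are writable by group or others, and prints su activity from a syslog-style authentication log. The function names are hypothetical. The location of the authentication log (for example /var/log/secure or /var/log/auth.log) varies by distribution.

```sh
# loose_log_files and su_entries are hypothetical helper names.

# List regular files under DIR that are writable by group or others.
loose_log_files() {
    find "$1" -type f \( -perm -020 -o -perm -002 \) -print
}

# Print lines logged by the su program ("su:" or "su[PID]:"),
# without matching sudo entries.
su_entries() {
    grep -E '[[:space:]]su(\[[0-9]+\])?:' "$1"
}
```

For example, run 'loose_log_files /var/log' and 'su_entries /var/log/secure', then review anything unexpected.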

Local Security

The next thing to take a look at is the security in your system against attacks from local users.

Getting access to a local user account is one of the first things that system intruders attempt while on their way to exploiting the root account. With lax local security, they can then "upgrade" their normal user access to root access using a variety of bugs and poorly setup local services. If you make sure your local security is tight, then the intruder will have another hurdle to jump.

Local users can also cause a lot of havoc with your system even if they really are who they say they are. Providing accounts to people you don't know or for whom you have no contact information is a very bad idea.

Creating New Accounts

You should make sure you provide user accounts with only the minimal requirements for the task they need to do. If you provide your son (age 11) with an account, you might want him to only have access to a word processor or drawing program, but be unable to delete data that is not his.

Several good rules of thumb when allowing other people legitimate access to your Linux machine:

- Give them the minimal amount of privileges they need.

- Be aware when/where they login from, or should be logging in from.

- Make sure you remove inactive accounts, which you can determine by using the 'last' command and/or checking log files for any activity by the user.

- Using the same user ID for a given person on all computers and networks is advisable. It eases account maintenance and permits easier analysis of log data. You might consider using NIS or LDAP to set up centralised logins for your users.

- Shared (group) user IDs should be absolutely prohibited. Individual user accounts provide accountability, and this is not possible with group accounts.

Many local user accounts that are used in security compromises have not been used in months or years. Since no one is using them, they provide the ideal attack vehicle.
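Candidates for removal can be found by comparing your account list against 'last' output. The function below is a minimal sketch, and its name is hypothetical. It reads 'last' output on standard input and prints each named account that has no recorded login. Keep two caveats in mind. First, wtmp is usually rotated, so "no entry" only means no login during the period the file covers. Second, some versions of 'last' truncate long user names unless run with -w.

```sh
# inactive_users is a hypothetical helper name used for illustration.
# Usage: last | inactive_users "user1 user2 ..."
inactive_users() {
    # First column of 'last' output is the user name (also "reboot", "wtmp").
    seen=$(awk '{print $1}' | sort -u)
    for user in $1; do
        # -x: match the whole line, so "al" does not match "alice".
        if ! printf '%s\n' "$seen" | grep -qx "$user"; then
            echo "$user"
        fi
    done
}
```

For example, 'last -w | inactive_users "alice bob carol"' lists which of those accounts have not logged in recently.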

Root Security

The most sought-after account on your machine is the root (superuser) account. This account has authority over the entire machine, which may also include authority over other machines on the network. Remember that you should only use the root account for very short, specific tasks, and should mostly run as a normal user. Even small mistakes made while logged in as the root user can cause problems. The less time you are on with root privileges, the safer you will be.

Several tricks to avoid messing up your own box as root:

- When running a complex command, try running it first in a non-destructive way, especially commands that use globbing. For example, if you want to do 'rm a*.txt', first do 'ls a*.txt' and make sure you are going to delete the files you think you are. Using echo in place of destructive commands also sometimes works.

- Give your users a default alias for the rm command (such as 'rm -i') that asks for confirmation before deleting files.

- Only become root to do single, specific tasks. If you find yourself trying to figure out how to do something, go back to a normal user shell until you are sure what needs to be done by root. sudo can be a great help here for running specific superuser commands, such as shutting down the machine or mounting a partition.

- The command path for the root user is very important. The command path (that is, the PATH environment variable) specifies the directories in which the shell searches for programs. Try to limit the command path for the root user as much as possible, and never include '.' (which means "the current directory") in your PATH. Additionally, never have writable directories in your search path, as this can allow attackers to modify or place new binaries in your search path, allowing them to run as root the next time you run that command.

- Never use the rlogin/rsh/rexec suite of tools as root. They are subject to many sorts of attacks, and are downright dangerous when run as root. Never create a .rhosts file for root.

- The /etc/securetty file contains a list of terminals that root can log in from. By default this is set to only the local virtual consoles (vtys). Be very wary of adding anything else to this file. You should be able to log in remotely (using SSH) as your regular user account and then su if you need to, so there is no need to be able to log in directly as root.

- Always be slow and deliberate running as root. Your actions could affect a lot of things. Think before you type!
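The PATH advice above can be checked mechanically. The sketch below is a hypothetical audit_path function that assumes a POSIX shell and a 'find' that supports -H. It warns about empty entries and '.' (both mean the current directory), about relative entries, and about directories writable by group or others.

```sh
# audit_path is a hypothetical helper name used for illustration.
# Usage: audit_path [PATH-string]   (defaults to the current $PATH)
# The body runs in a subshell, so the IFS and 'set -f' changes do not leak.
audit_path() (
    path=${1-$PATH}
    # A leading, trailing or doubled ':' is an empty entry (the current directory).
    case ":$path:" in
        *::*) echo "WARN: empty entry in PATH (acts as the current directory)" ;;
    esac
    # Split on ':' only, with filename globbing turned off.
    IFS=:
    set -f
    for dir in $path; do
        [ -n "$dir" ] || continue
        case $dir in
            .) echo "WARN: '.' in PATH" ;;
            /*) ;;
            *) echo "WARN: relative entry in PATH: $dir" ;;
        esac
        # -H makes find examine the target when the entry is a symlink.
        if [ -d "$dir" ] &&
           [ -n "$(find -H "$dir" -prune \( -perm -020 -o -perm -002 \) -print)" ]; then
            echo "WARN: group/world-writable directory in PATH: $dir"
        fi
    done
)
```

Run 'audit_path' as root with no arguments to check root's current PATH. No output means none of these problems were found.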

If you absolutely need to allow someone (hopefully very trusted) to have root access to your machine, there are a few tools that can help.

sudo allows users to use their own password to run a limited set of commands as root. This would allow you to, for instance, let a user eject and mount removable media on your Linux box, but have no other root privileges. sudo also keeps a log of all successful and unsuccessful sudo attempts, allowing you to track down who used what command to do what. For this reason sudo works well even in places where a number of people have root access, because it helps you keep track of changes made.
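For the removable-media example, a sudoers rule might look like the line this sketch prints. The function name sudo_rule and the user alice are hypothetical. The mount and umount paths differ between distributions, so check them with 'command -v mount'. sudoers requires fully qualified command paths, so the function rejects bare names.

```sh
# sudo_rule is a hypothetical helper name used for illustration.
# Usage: sudo_rule USER /full/path/cmd1 [/full/path/cmd2 ...]
# Prints: USER ALL=(root) /full/path/cmd1, /full/path/cmd2
sudo_rule() {
    user=$1
    shift
    cmds=""
    for cmd in "$@"; do
        # sudoers only accepts fully qualified command paths.
        case $cmd in
            /*) ;;
            *) echo "error: '$cmd' is not an absolute path" >&2; return 1 ;;
        esac
        # Join the commands with ", " as sudoers expects.
        cmds=${cmds:+$cmds, }$cmd
    done
    echo "$user ALL=(root) $cmds"
}
```

Always edit sudoers with visudo, for example 'visudo -f' followed by the name of a drop-in file. Then check the syntax with 'visudo -c', since a syntax error can leave you unable to use sudo at all.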

Although sudo can be used to give specific users specific privileges for specific tasks, it does have several shortcomings. It should be used only for a limited set of tasks, like restarting a server or adding new users. Any program that offers a shell escape will give root access to a user invoking it via sudo. This includes most editors, for example. Also, a program as harmless as /bin/cat can be used to overwrite files, which could allow root to be exploited. Consider sudo as a means for accountability, and don't expect it to replace the root user and still be secure.

Note: Some Linux distributions, such as Ubuntu, deviate from this principle and use sudo for all system administration tasks.

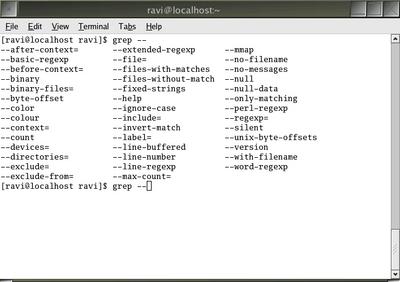

Network Security

Network security includes stopping unnecessary services from running on your machine. For example, if you are not using telnet (and rightly so), you can disable this service on your server. Using firewalls to restrict the flow of data across the network is also very desirable (iptables and TCP Wrappers come to mind here). An alert system or network administrator will run programs like nmap and netstat to check for, and plug, any holes in the network.
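As a small example of this kind of checking, the sketch below reads 'netstat -tln' output and prints TCP ports that are listening on non-loopback addresses but are not in a list of ports you expect. It assumes the net-tools netstat column layout, with the local address in the fourth column and the state in the last. 'ss' lays out its output differently. The function name unexpected_listeners is hypothetical.

```sh
# unexpected_listeners is a hypothetical helper name used for illustration.
# Usage: netstat -tln | unexpected_listeners "22 80"
unexpected_listeners() {
    awk -v allowed=" $1 " '
        # Only TCP sockets in the LISTEN state (tcp and tcp6 lines).
        $1 ~ /^tcp/ && $NF == "LISTEN" {
            port = $4
            sub(/.*:/, "", port)          # keep the part after the last colon
            host = substr($4, 1, length($4) - length(port) - 1)
            # Services bound only to loopback are not reachable from the network.
            if (host ~ /^127\./ || host == "::1") next
            # Print each port once, unless it is in the allowed list.
            if (index(allowed, " " port " ") == 0 && !seen[port]++) print port
        }'
}
```

Any port it prints belongs to a service you should justify, disable, or firewall.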