For tracing the system calls of a program, we have a very good tool in strace. What is unique about strace is that, when it is run in conjunction with a program, it outputs all the calls made to the kernel by the program. In many cases, a program may fail because it is unable to open a file or because of insufficient memory. And tracing the output of the program will clearly show the cause of either problem.

The use of strace is quite simple and takes the following form:

$ strace <name of the program>

For example, I can run a trace on 'ls' as follows :

$ strace ls

And this will output a great amount of data on to the screen. If it is hard to keep track of the scrolling mass of data, then there is an option to write the output of strace to a file instead which is done using the -o option. For example,

$ strace -o strace_ls_output.txt ls

... will write all the tracing output of 'ls' to the 'strace_ls_output.txt' file. Now all it requires is to open the file in a text editor and analyze the output to get the necessary clues.

It is common to find a lot of system function calls in the strace output. The most common of them being open(),write(),read(),close() and so on. But the function calls are not limited to these four as you will find many others too.

For example, if you look in the strace output of ls, you will find the following line:

open("/lib/libselinux.so.1", O_RDONLY) = 3

This means that some aspect of ls requires the library module libselinux.so.1 to be present in the /lib folder. And if the library is missing or in a different path, then that aspect of ls which depends on this library will fail to function. The line of code signifies that the opening of the library libselinux.so.1 is successful.

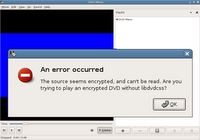

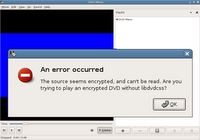

Here I will share my experience in using strace to solve a particular problem I faced. I had installed all the multimedia codecs including the libdvdcss which allowed me to play encrypted DVDs in Ubuntu Linux which I use on a daily basis. But after installing all the necessary codecs, when I tried playing a DVD movie, totem gave me an error saying that it was unable to play the movie (see the picture below). But since I knew that I had already installed libdvdcss on my machine, I was at a loss what to do.

Fig: Totem showing error saying that it cannot find libdvdcss

Fig: Totem showing error saying that it cannot find libdvdcss

Then I ran strace on totem as follows :

$ strace -o strace.totem totem

... and then opened the file strace.totem in a text editor and searched for the string libdvdcss . And not surprisingly I came across this line of output as shown in the listing below.

# Output of strace on totem

open("/etc/ld.so.cache", O_RDONLY) = 26

fstat64(26, {st_mode=S_IFREG|0644, st_size=58317, ...}) = 0

old_mmap(NULL, 58317, PROT_READ, MAP_PRIVATE, 26, 0) = 0xb645e000

close(26)

access("/etc/ld.so.nohwcap", F_OK) = -1 ENOENT (No such file or directory)

...

open("/lib/tls/i686/cmov/libdvdcss.so.2", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib/tls/i686/cmov", {st_mode=S_IFDIR|0755, st_size=1560, ...}) = 0

...

stat64("/lib/i486-linux-gnu", 0xbfab4770) = -1 ENOENT (No such file or directory)

munmap(0xb645e000, 58317) = 0

open("/usr/lib/xine/plugins/1.1.1/xineplug_inp_mms.so", O_RDONLY) = 26

read(26, "\177ELF\1\1\1\0\0\0\0\0\0\0\0\0\3\0\3\0\1\0\0\0\320\27"..., 512) = 512

fstat64(26, {st_mode=S_IFREG|0644, st_size=40412, ...}) = 0

In the above listing which I have truncated for clarity, the line in bold clearly shows that totem is trying to find the library in, among other places, the '/lib/tls/i686/cmov/' directory and the return value of -1 shows that it has failed to find it. So I realized that for totem to correctly play the encrypted DVD, it has to find the libdvdcss.so.2 file in the path it is searching.

Then I used the find command to locate the library and then copy it to the directory /lib/tls/i686/cmov/. Once I accomplished this, I tried playing the DVD again in totem and it started playing without a hitch.

Fig: Totem playing an encrypted DVD Movie

Fig: Totem playing an encrypted DVD Movie

Just to make sure, I took another trace of totem and it showed that the error was rectified as shown by the bold line of output below.

# Output of the second strace on totem

open("/etc/ld.so.cache", O_RDONLY) = 26

fstat64(26, {st_mode=S_IFREG|0644, st_size=58317, ...}) = 0

old_mmap(NULL, 58317, PROT_READ, MAP_PRIVATE, 26, 0) = 0xb644d000

close(26) = 0

access("/etc/ld.so.nohwcap", F_OK) = -1 ENOENT (No such file or directory)

...

open("/lib/tls/i686/cmov/libdvdcss.so.2", O_RDONLY) = 26

...

stat64("/lib/tls/i686/sse2", 0xbffa4020) = -1 ENOENT (No such file or directory)

munmap(0xb645e000, 58317) = 0

open("/usr/lib/xine/plugins/1.1.1/xineplug_inp_mms.so", O_RDONLY) = 26

read(26, "\177ELF\1\1\1\0\0\0\0\0\0\0\0\0\3\0\3\0\1\0\0\0\360\20"..., 512) = 512

fstat64(26, {st_mode=S_IFREG|0644, st_size=28736, ...}) = 0

Opening the man page of strace, one will find scores of options. For example, if you use the option -t, then strace will prefix each line of the trace with the time of day. One can even specify the system call functions to trace using the -e option. For example, to trace only open() and close() function system calls, one can use the command as follows:

$ strace -o strace.totem -e trace=open,close totem

The ubiquitous strace should not be confused with DTrace that ships with Sun Solaris. strace is just a single tool which takes care of a small part which is tracing a single program. Where as Sun's DTrace toolkit is much more powerful and consists of a collection of scripts which can track, tune and aid the user in troubleshooting ones system in real time. More over, dtrace is a scripting language with close semblance to C/C++ and awk. Put another way, strace tool in GNU/Linux provides only one of the many functions provided by DTrace in Sun Solaris. That being said, strace plays an important part in aiding the user to troubleshoot ones programs by providing a view of the system calls that the program makes to the Linux kernel.

PS: If you are wondering which movie I was intent on watching, it is "For a Few Dollars More" - an all time classic western starring Clint Eastwood. I really like this movie.