Linux follows the philosophy that everything is a file. A keyboard, monitor, mouse, printer: you name it, and it is represented as a file in Linux. Each of these pieces of hardware has a device file (usually under /dev) associated with it. This convention has its advantages, the main one being that you can use all the common command-line tools in Linux to send, receive or manipulate data with these devices.

For example, my mouse has the device file '/dev/input/mice' associated with it (yours may be different).

So if I want to see the output of the mouse on my screen, I just enter the command:

$ cat /dev/input/mice

... and then move the mouse to get characters on the terminal. Try it out yourself. On some systems you may need root privileges to read this device.

Note: In some cases, running the above command will scramble your terminal display. If that happens, you can type the command:

$ reset

... to get it corrected.

Linux gives each program that runs on it access to three important files: standard input, standard output and standard error. These special files have the file descriptors 0, 1 and 2 respectively. In the previous example, the utility 'cat' writes to standard output, which by default is the screen or the console.

- Standard Input - 0

- Standard Output - 1

- Standard Error - 2

Redirecting output to other files

You can easily redirect input or output to a file other than the default one. This is done in Linux using the input and output redirection symbols, which are as follows:

> - Output redirection

< - Input redirection

Using a combination of these symbols and the standard file descriptors you can achieve complex redirection tasks quite easily.

Output Redirection

Suppose I want to redirect the output of 'ls' to a text file instead of the console. I do this using the output redirection symbol as follows:

$ ls -l myfile.txt > test.txt

When you execute the above command, the output is redirected to a file named test.txt. If 'test.txt' does not exist, it is created automatically; if it already exists, its previous contents are replaced by default. Either way, the output of the command 'ls -l' is written to it. This assumes that a file called myfile.txt exists in my current directory.

Now let's see what happens when we execute the same command after deleting the file myfile.txt.
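
A small sketch of this behaviour, run in a scratch directory so that none of your own files are touched. The directory and its contents are made up for the illustration, and plain 'ls' is used instead of 'ls -l' only to keep the output short.

```shell
# Work in a throwaway directory (all names here are hypothetical).
workdir=$(mktemp -d)
cd "$workdir"

echo "hello" > myfile.txt      # '>' creates myfile.txt
ls myfile.txt > test.txt       # the listing goes to test.txt, not the screen
cat test.txt                   # shows: myfile.txt

# Redirecting to the same file again replaces what was there.
echo "replaced" > test.txt
cat test.txt                   # shows: replaced
```

Nothing is printed by the 'ls' line itself, because its output went into test.txt instead of the terminal.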

$ rm myfile.txt

$ ls -l myfile.txt > test.txt

ls: myfile.txt: No such file or directory -- ERROR

What happens is that 'ls' does not find the file named myfile.txt and displays an error on the console or terminal. The error still appears on the screen because '>' redirects only standard output, while error messages are written to standard error. Now here is the fun part. You can also redirect the error generated above to another file, instead of displaying it on the console, by combining the error file descriptor with the output redirection symbol as follows:

$ ls -l myfile.txt 2> test.txt

The thing to note in the above command is '2>' which can be read as - redirect the error (2) to the file test.txt.
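
As a rough illustration, the two descriptors can be sent to different files in a single command. The file names present.txt, missing.txt, listing.txt and errors.txt are invented for this sketch.

```shell
# Scratch directory with one file that exists (hypothetical names).
workdir=$(mktemp -d)
cd "$workdir"
touch present.txt

# present.txt exists and missing.txt does not, so ls produces both
# normal output (descriptor 1) and an error message (descriptor 2).
# ls exits with a failure status here, hence the '|| true'.
ls present.txt missing.txt > listing.txt 2> errors.txt || true

cat listing.txt    # the normal output: present.txt
cat errors.txt     # the "No such file or directory" message
```

The exact wording of the error message depends on which 'ls' implementation you have, but it ends up in errors.txt rather than on the terminal.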

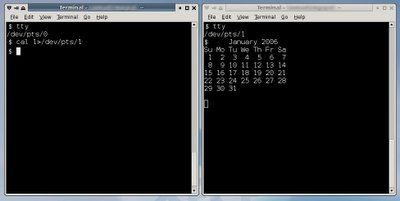

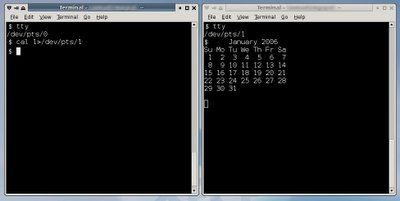

Fig: Two open xterms can be used to practice output redirection.


Here is one practical use of error redirection that I rely on regularly. When I search the whole hard disk for a file as a normal user, I get a lot of errors such as:

find: /file/path: Permission denied

In such situations I use the error redirection to weed out these error messages as follows:

$ find / -iname \* 2> /dev/null

Now all the error messages are redirected to the /dev/null device and I get only the actual find results on the screen.
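
To try this without scanning the whole disk, here is a minimal sketch on a scratch directory. The directory layout and the file name notes.txt are assumptions made for the example; if you run it as root, the locked directory is still readable and there is simply no error to discard.

```shell
# Build a small tree with one unreadable directory (hypothetical names).
workdir=$(mktemp -d)
mkdir "$workdir/open" "$workdir/locked"
touch "$workdir/open/notes.txt"
chmod 000 "$workdir/locked"      # a normal user can no longer read it

# Error messages go to /dev/null; only the matches are kept.
# find exits with a failure status after an error, hence '|| true'.
found=$(find "$workdir" -name 'notes.txt' 2> /dev/null || true)
echo "$found"

chmod 755 "$workdir/locked"      # restore access so it can be cleaned up
```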

Note: /dev/null is a special kind of file whose size is always zero; whatever you write to it simply disappears. Its counterpart is /dev/zero, which acts as an endless source of zero bytes. You can use /dev/zero to create a file of any size, for instance when creating a swap file.

If you have a line printer connected to your Linux machine and its device file is, say, /dev/lp0, then you can send any output to the printer using output redirection. For example, to print the contents of a text file, I do the following:
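
One possible way to see both devices in action is sketched below. The file name blank.img and the 1 MiB size are arbitrary choices for the illustration, and 'head -c' is just one of several tools that can take a fixed number of bytes from /dev/zero.

```shell
workdir=$(mktemp -d)

# Take exactly 1 MiB (1048576 bytes) of zero bytes from /dev/zero.
head -c 1048576 /dev/zero > "$workdir/blank.img"
wc -c "$workdir/blank.img"       # reports the size in bytes

# Anything written to /dev/null is discarded.
echo "this text goes nowhere" > /dev/null
```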

$ cat testfile.txt > /dev/lp0

Input Redirection

Input redirection uses the less-than symbol (<) and is usually applied to a program that accepts user input from the keyboard. A legendary use of input redirection that I have come across is mailing the contents of a text file to another user.

$ mail ravi < mail_contents.txt

I say legendary because, with the advances in GUIs and the availability of good email clients, this method is now seldom used.

Suppose you want to find the number of lines, words and characters in a text file and, at the same time, write the result to another file. This is achieved using a combination of the input and output redirection symbols as follows:

$ wc < my_text_file.txt > output_file.txt

What happens above is that the contents of the file my_text_file.txt are passed to the command 'wc', whose output is in turn redirected to the file output_file.txt.
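
Here is a small worked version of the same command. The sample text is invented for the example; it has three lines, five words and 24 characters.

```shell
workdir=$(mktemp -d)
cd "$workdir"

# Create a sample input file (made-up content).
printf 'one two\nthree\nfour five\n' > my_text_file.txt

# wc reads the file on standard input and writes its counts to output_file.txt.
wc < my_text_file.txt > output_file.txt
cat output_file.txt    # lines, words and characters, in that order
```

Because 'wc' receives the text on standard input rather than as a file name argument, its output contains only the three counts and no file name.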

Appending data to a file

You can also use the >> symbol instead of > to append data to a file rather than replace its contents. For example,

$ cat - >> test.txt

... will append whatever you type to the file test.txt. Press Ctrl-D on an empty line when you are done.
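
The difference between the two symbols can be sketched without typing anything interactively. The lines of text below are placeholders chosen for the example.

```shell
workdir=$(mktemp -d)
cd "$workdir"

echo "first line"  >  test.txt   # '>' starts the file afresh
echo "second line" >> test.txt   # '>>' adds to the end
echo "third line"  >> test.txt

cat test.txt                     # all three lines, in order
```

Had the second and third commands used '>' instead, test.txt would contain only "third line".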