Tuesday, 28 February 2006

apt-pinning - Configuring Debian to run the latest packages

Sunday, 26 February 2006

Deskbar Applet - Integrating Google Search on the Linux Desktop

Deskbar is an applet for Gnome users which brings all the search functions together in a single utility. To start using this applet, one has to install it from the repositories, which is as simple as running the command:

# apt-get install deskbar-applet

- Excellent integration with Beagle to search for files containing a certain text.

- Open applications by just typing the name of the application in the deskbar text box.

- Yahoo search engine integration: Just type some text into the Deskbar input box residing in your panel and, provided you are online, a series of web search results pops up on your desktop.

- Google web search on your desktop: I found this really interesting because Google has not yet released a search tool for the Linux platform similar to the one for Windows. But to have an integrated Google search in Linux (using Deskbar of course), some additional work has to be done.

Saturday, 25 February 2006

An In-depth Review of Ext3 Journaling Filesystem

Now here is the interesting part; if you do a clean unmount of the ext3 file system, there is nothing to stop you from mounting it as an ext2 filesystem, and your system will recognise the partition as well as the data in it without any problem.

I came across this excellent analysis of the ext3 journaling filesystem by Dr. Stephen Tweedie which should be an informative read for people interested in the subject of filesystems. The article was written in 2000 but I believe what has been explained there has not lost its relevance even today.

Friday, 24 February 2006

Password protect your website hosted on Apache web server

- .htpasswd - This file contains the user name and password combinations of the users who are allowed access to the website or page. This file can reside anywhere other than the directory (or path) of your website. Usually, it is created in the Apache web server directory (/etc/apache2/.htpasswd), because this file should not be accessible to the visitors to the site.

- .htaccess - This file defines the actual rules based on which users are given or denied access to a particular section or the whole of the website. This file should reside in the base directory of one's website. For example, if my website is located in the path '/var/www/mysite.com' and I want to provide user authentication to the entire website, then I will store the file .htaccess in the following location - '/var/www/mysite.com/.htaccess'.

# htpasswd -c /etc/apache2/.htpasswd ravi

New password: *****

Re-type new password: *****

Adding password for user ravi

#_

Now I make this .htpasswd file readable by all the users as follows:

# chmod 644 /etc/apache2/.htpasswd

# touch /var/www/mysite.com/.htaccess

Now I enter the following lines in the .htaccess file :

AuthUserFile /etc/apache2/.htpasswd

AuthGroupFile /dev/null

AuthName MySiteWeb

AuthType Basic

require user ravi

require user ravi john

require valid-user

The .htaccess file also has to be provided the right file permissions.

# chmod 644 /var/www/mysite.com/.htaccess

<Directory /var/www/mysite.com/>

...

AllowOverride None

...

</Directory>

TO

<Directory /var/www/mysite.com/>

...

AllowOverride AuthConfig

...

</Directory>
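With AuthType Basic, the browser sends the user name and password joined by a colon and base64 encoded in an Authorization header on every request. The following Python sketch shows that encoding and how it can be reversed; the password 'secret' is made up for illustration, and the helper names are my own, not part of Apache.

```python
import base64


def basic_auth_header(user, password):
    """Build the Authorization header value used by HTTP Basic auth."""
    credentials = f"{user}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def decode_basic_auth(header_value):
    """Recover (user, password) from a Basic Authorization header value."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic authorization header")
    decoded = base64.b64decode(encoded).decode("utf-8")
    # Only the first colon separates the user name from the password.
    user, _, password = decoded.partition(":")
    return user, password


# 'secret' is a hypothetical password used only for this example.
header = basic_auth_header("ravi", "secret")
print(header)
print(decode_basic_auth(header))
```

Since base64 is an encoding and not encryption, anyone who can read the traffic can recover the password, so Basic authentication is best combined with HTTPS.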

Monday, 20 February 2006

A concise explanation of I-Nodes

So what are I-Nodes ?

I-nodes are data structures which hold the information about a file that is unique to that file and which helps the operating system differentiate it from other files.

$ ls -il test.txt

148619 -rw-r--r-- 1 ravi ravi 125 2006-02-14 08:39 test.txt

Suppose I create a hard link of the file test.txt as follows:

$ ln test.txt test_hardlink.txt

$ ls -i test.txt test_hardlink.txt

148619 test_hardlink.txt

148619 test.txt

To delete the file together with all its hard links, search by file name or by i-node number:

# find / -samefile test.txt -exec rm {} \;

# find / -inum 148619 -exec rm {} \;

Typically, an i-node will contain the following data about the file:

- The user ID (UID) and group ID (GID) of the user who is the owner of the file.

- The type of file - is the file a directory or another type of special file?

- User access permissions - which users can do what with the file.

- The number of hard links to the file - as explained above.

- The size of the file

- The time the file was last modified

- The time the I-Node was last changed - if permissions or information on the file change then the I-Node is changed.

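- The addresses of the data blocks - data blocks are where the contents of the file are placed. The number of direct block addresses depends on the filesystem; ext2 and ext3, for example, keep 12 of them in the i-node.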

- A single, double and triple indirect pointer
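Most of the fields listed above can be read from a program through the stat system call. The following is a minimal Python sketch, assuming a POSIX filesystem that supports hard links; the file names mirror the ones used earlier and the function name inode_summary is my own.

```python
import os
import stat
import tempfile


def inode_summary(path):
    """Collect the i-node fields exposed by os.stat for the given path."""
    st = os.stat(path)
    return {
        "inode": st.st_ino,                     # i-node number, as shown by ls -i
        "links": st.st_nlink,                   # number of hard links
        "uid": st.st_uid,                       # owner's user ID
        "gid": st.st_gid,                       # owner's group ID
        "size": st.st_size,                     # size in bytes
        "is_dir": stat.S_ISDIR(st.st_mode),     # file type check
        "mode": stat.filemode(st.st_mode),      # permissions, e.g. -rw-r--r--
        "mtime": st.st_mtime,                   # last modification of the contents
        "ctime": st.st_ctime,                   # last change of the i-node itself
    }


with tempfile.TemporaryDirectory() as workdir:
    original = os.path.join(workdir, "test.txt")
    hardlink = os.path.join(workdir, "test_hardlink.txt")
    with open(original, "w") as handle:
        handle.write("hello\n")
    os.link(original, hardlink)  # same effect as: ln test.txt test_hardlink.txt

    first = inode_summary(original)
    second = inode_summary(hardlink)
    print(first["inode"] == second["inode"], first["links"], first["mode"])
```

Both names report the same i-node number and a link count of 2, which is the behaviour the ls -i listing above demonstrates.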

Thursday, 16 February 2006

Fstab in Linux

Fstab is a file in Linux or Unix which lists all the available disks and disk partitions on your machine and indicates how they should be mounted. The full path of the fstab file in Linux is /etc/fstab. Ever wonder how Linux automatically mounts any or all of your partitions at boot time? It does so by reading the parameters from the /etc/fstab file.

Syntax of fstab file

The following is the syntax of the /etc/fstab file in Linux.

[Device name | UUID] [Mount Point] [File system] [Options] [dump] [fsck order]

Device Name - Denotes the unique physical node which is identified with a particular device. In Linux and Unix, everything is considered to be a file. That includes hard disks, mice, keyboards and so on. Possible values are /dev/hdaX, /dev/sdaX, /dev/fdX, and so on, where 'X' stands for a numeral.

UUID - This is an acronym for Universally Unique IDentifier. It is a unique string that is assigned to your device. Some modern Linux distributions such as Ubuntu and Fedora use a UUID instead of a device name as the first parameter in the /etc/fstab file.

Mount Point - This denotes the full path where you want to mount the specific device. Note: Before you provide a mount point, make sure the folder exists.

File System - Linux supports lots of filesystems. Some of these options are ext2,ext3,reiserfs,ntfs-3g (For mounting Windows NTFS partitions),proc (for proc filesystem),udf,iso9660 and so on.

Options - This field accepts the largest number of settings and gives the user the most flexibility in mounting the devices connected to the machine. Check the man page of fstab to know all the options. There are too many to list here.

Dump - This field takes the values 0 and 1. If it is set to 0, the dump utility will not back up the file system. If it is set to 1, the file system will be backed up.

fsck order - This field takes the values 0, 1 and 2 and sets the order in which fsck checks the file systems at boot time. 0 means the file system is not checked. The root file system should be given 1 so that it is checked first, and other file systems that need checking should be given 2.

Contents of a typical /etc/fstab file in Ubuntu Linux

The following are the contents of the /etc/fstab file on my Ubuntu machine.

proc /proc proc defaults 0 0

# /dev/sda1

UUID=dc2c5c36-3773-4627-8657-626f0ef8aa9e / ext3 relatime,errors=remount-ro 0 1

# /dev/sda2

UUID=57864470-a324-4c5c-ad49-ed1a05300b0d none swap sw 0 0

/dev/scd0 /media/cdrom0 udf,iso9660 user,noauto,exec,utf8 0 0

/dev/fd0 /mnt/floppy ext2 noauto,user 0 0

From the above listing, one can gather that the proc file system has been mounted in the /proc directory. A UUID has been provided for /dev/sda1 and /dev/sda2. The fsck order for the root node '/' has been set to 1, which means it is the first file system that fsck checks at boot time.

For more details on the contents of the /etc/fstab file, check the man page of fstab in Linux.
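To make the six fields concrete, here is a small Python sketch that splits fstab lines into named fields. It is only an illustration under simple assumptions: fields are separated by whitespace, comment lines start with '#', and a missing dump or fsck order field is treated as 0. The class and function names are my own.

```python
from typing import List, NamedTuple


class FstabEntry(NamedTuple):
    device: str         # device name or UUID=...
    mount_point: str
    fs_type: str
    options: List[str]  # comma separated options, split into a list
    dump: int
    fsck_order: int


def parse_fstab(text: str) -> List[FstabEntry]:
    """Parse the text of an fstab file into a list of entries."""
    entries = []
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        if len(fields) < 4:
            raise ValueError(f"expected at least 4 fields: {raw_line!r}")
        dump = int(fields[4]) if len(fields) > 4 else 0
        fsck_order = int(fields[5]) if len(fields) > 5 else 0
        entries.append(
            FstabEntry(fields[0], fields[1], fields[2],
                       fields[3].split(","), dump, fsck_order)
        )
    return entries


# The listing from this post, used as sample input.
SAMPLE = """\
proc /proc proc defaults 0 0
# /dev/sda1
UUID=dc2c5c36-3773-4627-8657-626f0ef8aa9e / ext3 relatime,errors=remount-ro 0 1
# /dev/sda2
UUID=57864470-a324-4c5c-ad49-ed1a05300b0d none swap sw 0 0
/dev/scd0 /media/cdrom0 udf,iso9660 user,noauto,exec,utf8 0 0
/dev/fd0 /mnt/floppy ext2 noauto,user 0 0
"""

entries = parse_fstab(SAMPLE)
for entry in entries:
    print(entry.mount_point, entry.fs_type, entry.options, entry.fsck_order)
```

To inspect a real system, the same function could be given the contents of /etc/fstab instead of the sample string.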

Wednesday, 15 February 2006

Host websites on your local machine using Apache web server

Packages to be installed

apache2 , apache2-common , apache2-mpm-prefork

And optionally ...

apache2-utils , php4 (If you need PHP support) , php4-common

Configuration details

$ sudo cp -R -p websiteA /var/www/.

$ sudo cp -R -p websiteB /var/www/.

#FILE: /etc/apache2/sites-available/websiteA

NameVirtualHost websiteA:80

<VirtualHost websiteA:80>

ServerAdmin ravi@localhost

ServerName websiteA

DocumentRoot /var/www/websiteA/

<Directory />

Options FollowSymLinks

AllowOverride None

</Directory>

<Directory /var/www/websiteA/>

Options Indexes FollowSymLinks MultiViews

AllowOverride None

Order allow,deny

allow from all

...

</Directory>

...

...

</VirtualHost>

#FILE : /etc/hosts

127.0.0.1 localhost.localdomain localhost websiteA websiteB

$ cd /etc/apache2/sites-enabled

$ sudo ln -s /etc/apache2/sites-available/websiteA .

$ sudo ln -s /etc/apache2/sites-available/websiteB .

$ sudo apache2ctl restart

OR

$ sudo /etc/init.d/apache2 restart

Tuesday, 14 February 2006

Multi booting 100+ OSes on a single machine

- Partition your hard disk first before starting to install the OSes.

- Keep personal data segregated from the OS files. Put another way, it is prudent to have a separate partition for /home.

- He doesn't find any drawbacks in using one partition per linux distribution.

- Learn to boot the system manually which will give you a fair understanding of how the system is booted.

- For all the Linux OSes, he has used one common swap partition.

Additional Info

Monday, 13 February 2006

Basic setup of MySQL in GNU/Linux

$ sudo mysql

Password : *****

mysql>_

mysql> GRANT ALL PRIVILEGES ON *.* TO ravi@localhost

IDENTIFIED BY 'password'

WITH GRANT OPTION;

mysql> quit

$ mysql -h localhost -u ravi -p

Enter Password: *****

mysql>_

Some basic SQL manipulations which I found useful

Creation of database

mysql> CREATE DATABASE mydatabase;

mysql> DROP DATABASE mydatabase;

mysql> SHOW TABLES;

mysql> USE my_other_database;

Resources

Official MySQL documentation online.

Also read : MySQL Cheat Sheet

Saturday, 11 February 2006

Integrating SSH in Gnu/Linux

Friday, 10 February 2006

XGL - An Xserver framework based on OpenGL

- Showcasing the power of OpenGL which will grab the attention of the large number of game developers who then will hopefully consider developing cross platform games using OpenGL instead of the Windows centric games that are developed at present using DirectX.

- You can show these special effects to your friends and members of your family and I can bet my shirt that they will be asking you to help them in installing Linux on their machine. This means, a lot of effort is reduced in persuading people to embrace Linux over any other proprietary OS.

- If you are using your Linux machine for entertainment and fun, these special effects will be a good pastime.

- I strongly feel that it is projects such as these that will be the tipping point in enabling Linux to grab a major slice of the OS market.

Wednesday, 8 February 2006

A collection of books, howtos and documentation on GNU/Linux for offline use

# apt-get install <package name>

- apt-doc - A detailed documentation on apt package management.

- apt-howto - A HOW-TO on the popular subject of apt package management.

- apt-dpkg-ref - This is an apt and dpkg quick reference sheet which forms a handy reference for those who find it difficult to memorize the commands.

- bash-doc - The complete documentation for bash in info format.

- absguide - This is an advanced bash scripting guide which explores the art of shell scripting. It serves as a textbook, a manual for self-study, and a reference and source of knowledge on shell scripting techniques. The exercises and heavily-commented examples invite active reader participation, under the premise that the only way to really learn scripting is to write scripts.

- debian-installer-manual - This package contains the Debian installation manual, in a variety of languages. It also includes the installation HOWTO, and a variety of developer documentation for the Debian Installer. This is the right documentation to read if you are thinking of installing Debian on your machine.

- debian-reference - This book covers many aspects of system administration through shell command examples. The book is hosted online at qref.sourceforge.net but by installing this package, you get to read the whole book offline.

- doc-linux-text and doc-linux-nonfree-html - Any long time Linux user will know that in the past the HOW-TOs and FAQs formed the life line of anybody hoping to install and configure Linux on their machine. This was especially true when the machine had some obscure hardware. These packages download all the HOW-TOs and FAQs hosted on the tldp.org site and make them available for offline use.

- linux-doc - This package makes available the Linux kernel documentation. It might come in handy if you intend to compile a kernel yourself or want to dig deep into understanding the working of the kernel.

- hwb - This is a hardware book which contains miscellaneous technical information about computers and other electronic devices. Among other things, you will find the pin out to most common and uncommon connectors available as well as information about how to build cables.

- rutebook - Rute's Exposition is one of the finest books available for any aspiring and even established Linux user. It is actually a book available on print but the author has released it online for free. You may read this book online too but by downloading this package, you get to read the book offline. (I highly recommend reading this book by anyone interested in using Linux).

- grokking-the-gimp - Linux has a top class graphics suite in Gimp. I have been using gimp to manipulate images published on this site as well as for other uses. This book which is also available online, covers all the aspects of working with Gimp in detail, all the while giving excellent practical examples interspersed with images. You may install this package if you wish to read the whole book offline. It is a 24 MB download though.

$ dpkg -L <package name>

Tuesday, 7 February 2006

Setting up a Mail Server on a GNU/Linux system

Monday, 6 February 2006

Upgrading to Firefox 1.5 in Ubuntu Linux

- Install libstdc++5 library. Ubuntu breezy has a later version (6.0) of this library and firefox 1.5 requires the earlier version.

- Download the firefox 1.5 package from the official Firefox website and unpack it into the /opt directory. (Usually this is all that is required to get firefox 1.5 running). But for greater integration with other programs as well as importing your bookmarks from the earlier version of the web browser, some additional work has to be done.

- Link to your plug-ins

- Rename your old profile in your home directory leaving it as a backup.

- To ensure that firefox 1.5 is the default browser, modify the symbolic link using the dpkg-divert command.

Sunday, 5 February 2006

Book Review: Linux Patch management - Keeping Linux systems up to date

Any system or network administrator will know the importance of applying patches to the various software running on their servers be it the numerous bug fixes or upgrades. Now when you are maintaining just a single machine, this is really a simple affair of downloading the patches and applying them on your machine. But what happens when you are managing multiple servers and hundreds of client machines? How do you keep all these machines under your control up to date with the latest bug fixes? Obviously, it is a waste of time and bandwidth to individually download all the patches and security fixes for each machine. This is where this book named "Linux Patch Management - Keeping Linux systems up to date" authored by Michael Jang gains significance. This book released under the Bruce Perens' open source series aims to address the topic of patch management in detail.

The second chapter deals exclusively with patch management on Red Hat and Fedora based Linux machines. Here the author walks the readers through creating a local Fedora repository. Maintaining a repository locally is not about just downloading all the packages to a directory on your local machine and hosting that directory on the network. You have to deal with a lot of issues here, like the hardware requirements, the kind of partition arrangement to make, what space to allocate to each partition, whether you need a proxy server and more. In this chapter, the author throws light on all these aspects in the process of creating the repositories. I really liked the section where the author describes in detail the steps needed to configure a Red Hat network proxy server.

The third chapter of this book namely SUSE's Update Systems and rsync mirrors describes in detail how one can manage patches with YaST. What is up2date for Red Hat is YaST for SuSE. And around 34 pages have been exclusively allocated for explaining each and every aspect of updating SuSE Linux using various methods like YaST Online Update and using rsync to configure a YaST patch management mirror for your LAN. But the highlight of this chapter is the explanation of Novell's unique way of managing the life cycle of Linux systems which goes by the name ZENworks Linux Management (ZLM). Even though the author does not go into the details of ZLM, he gives a fair idea about this new topic including accomplishing such basic tasks as installing the ZLM server, configuring the web interface, adding clients ... so on and so forth.

Ask any Debian user what he feels is the most important and useful feature of this OS, then in 90 percent of the cases, you will get the answer that it is Debian's contribution to a superior package management. The fourth chapter takes an in depth look into the working of apt. Usually a Debian user is exposed to just a few of the apt tools. In this chapter though, the author explains all the tools bundled with apt which makes this chapter a ready reference for any person managing Debian based system(s).

If the fourth chapter concentrated on apt for Debian systems, the next chapter explores how the same apt package management utility could be used to maintain Red Hat based Linux distributions.

One of the biggest complaints of users of Red Hat based Linux distributions a few years back was the lack of a robust package management tool in the same league as apt. To address this need, a group of developers created an alternative called YUM. The last two chapters of this book explore how one can use YUM to keep the system up to date as well as host one's own YUM repository on the LAN.

Chapters at a glance

- Patch Management Systems

- Consolidating Patches on a Red Hat/Fedora Network

- SUSE's Update Systems and rsync Mirrors

- Making apt Work for You

- Configuring apt for RPM Distributions

- Configuring a yum Client

- Setting up a yum Repository

Michael Jang has specialized in networks and operating systems. He has written books on four Linux certifications and one of them on RHCE is very popular among students attempting to get Red Hat certified. He also holds a number of certifications such as RHCE, SAIR Linux Certified Professional, CompTIA Linux+ Professional and MCP.

Book Specifications

Name : Linux Patch Management - Keeping Linux Systems Up To Date

Author : Michael Jang

Publisher : Prentice Hall

Price : Check at Amazon.com

No of pages : 270

Additional Info : Includes 45 days Free access to Safari Online edition of this book

Ratings: 4 stars out of 5

Things I like about this book

- Each chapter of the book explores a particular tool to achieve patch management in Linux and the author gives in depth explanation of the usage of the tool.

- All Linux users irrespective of which Linux distribution they use will find this book very useful to host their own local repositories because the author covers all distribution specific tools barring Gentoo in this book.

- The book is peppered with lots of examples and walk throughs which makes this an all in one reference on the subject of Linux patch management.

- I especially liked the chapter on apt package management which explored many useful commands which are seldom used or known by the greater percentage of people.

Friday, 3 February 2006

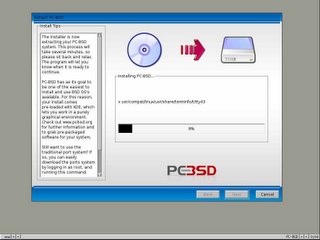

PC-BSD : A user friendly BSD flavour geared for the desktop

Advantages of PC-BSD

- A very easy to use GUI enabled installation process unlike the text installer found in FreeBSD.

- Is geared for the desktop user but with all the power, stability and security of FreeBSD. BTW, FreeBSD was around when Linux was not even born, which should give you a fair idea about this OS.

- Installing and uninstalling software is a point - and - click affair and will gladden the hearts of neophytes and Windows users alike.

- Bundles two FreeBSD kernels - a single processor kernel and a SMP enabled kernel. And the user can easily switch between the two (See system configuration dialog figure above).

- One drawback I found was the limited amount of software available on their site. But I consider that as a temporary phenomenon and is bound to change in the future as they continuously add more software.

- The GUI, even though very clean and intuitive at the present stage, is not without its quirks. For example, I installed the bash shell by the point and click method. But when I tried to change my default shell to bash from the User Manager widget (see figure above), the bash shell was not available for selection in the drop down box, and I had to do it the command line way. But I am sure such minor matters will be sorted out as more and more people try out PC-BSD.

- Also some people talk about the obvious blot in installing software using PC-BSD click and install method because there is some duplication of the common libraries used by the various software.

But seriously, for a person who has dedicated his full 80 GB hard disk or even half or quarter of that space for running PC-BSD, it is not a serious issue.

Thursday, 2 February 2006

Bruce Perens' forecasts for 2006

- Java will be overshadowed by newer entries like Ruby on Rails in the enterprise arena.

- Native Linux API will play a dominant role in the embedded market - especially in creating applications that run on cell phones.

- Cellular carriers (companies) will lose significance and will be just a means to an end. And customers will not be tied down to a particular cellular company.

- Feature phones will prosper only in cities where people overtly use mass transit and nowhere else.

- And lastly, Mr Perens feels that PHP will die a slow death.

Wednesday, 1 February 2006

WINE vs Windows XP benchmarks

Wine is a recursive acronym for "Wine Is Not an Emulator" and is designed to run, on Linux, software which runs natively only on Windows. Specifically, Wine implements the Win32 API (Application Programming Interface) library calls, so one can do away with the Windows OS as such. In the past, I have successfully run Photoshop, MS Word and a few Windows games in Linux using Wine. At times, I have noticed a remarkable performance improvement while running a particular piece of software using Wine when compared to running it on Windows. Now Tom Wickline has posted the results of the benchmark tests he carried out comparing Windows XP and Wine.